Quantum Entanglement and the Future of Artificial Intelligence

The realm of quantum physics, which defies our classical understanding of the world, introduces phenomena that seem almost magical. One such phenomenon is quantum entanglement, a property that links the states of particles so that measuring one is instantly correlated with the outcome for the other, irrespective of the distance separating them, although no usable signal travels between them. While entanglement has mystified scientists for decades, some researchers now view it as a potential building block for advancing artificial intelligence (AI). This article explores the intricate concept of quantum entanglement and its potential applications in AI.

What is Quantum Entanglement?

To understand quantum entanglement, we first need to dip our toes into quantum mechanics, the branch of physics that studies the smallest particles in the universe—particles so tiny that the rules of classica ...Read More

Analog Computers: Bridging the Past and Future with AI

In a digital age where zeros and ones dominate the computational landscape, the concept of analog computing might seem like a relic of the past. Yet, it's this very "old-world" technology that is making a surprising resurgence, particularly as we delve into the complex realms of artificial intelligence (AI) and machine learning. But what exactly are analog computers, and how do they fit into the future of AI? Let's explore this fascinating intersection of history and future.

What are Analog Computers?

Analog computers use continuously variable signals to represent information, as opposed to digital computers, which utilize discrete values (usually bits). In simpler terms, while digital computers deal with the black-and-white, analog computers revel in shades of gra ...Read More

Deep Fakes: A Double-Edged Sword in Criminal Investigations

In the golden age of technology, where the frontier between reality and illusion is increasingly blurred, a new player has emerged on the scene: deep fakes. These highly realistic computer-generated images and videos have evolved into a substantial concern, not only for the potential misrepresentation of individuals but also for the potential derailment of criminal justice.

The Illusion of Crime: Framing Innocents

Deep fakes have become notorious for their ability to fabricate audio and visual content that's nearly indistinguishable from the real thing. Using advanced machine learning algorithms, creators can replace one person's likeness and voice with another's. As a result, they can falsely implicate individuals in crimes they did not commit. These falsifi ...Read More

Order, Chaos, and Everything in Between: A Journey through Complex Systems

The world we live in is a marvelously intricate web of interconnections, a ballet of elements interrelating in such a way that they create phenomena greater than the sum of their parts. This richly intertwined network—be it a flock of birds moving in synchrony, the Internet, or the human brain—is a testament to the fascinating universe of complex systems.

Complex Systems: A Simplified Overview

Complex systems, in the simplest terms, are systems composed of many interconnected parts—called components or agents—that interact with each other in ways that often result in unexpected patterns or behaviors. These patterns emerge from the system as a whole and can't be easily predicted by merely understanding the individual parts. This phenomenon is known as emergence, and i ...Read More

Painting a Simplified Reality: The Complex Art of Animal Perception

Ever observed a bat navigating the night, or a dog tracking a scent? Animals face an intricate, bustling world teeming with stimuli – many of which are incomprehensible or invisible to human senses. It's as if they exist in alternate dimensions, interacting with the world in a way we can only imagine. How do they handle this overload of information? Their brains construct a new reality – a simplified, digestible version molded from their sensory input and past experiences. This fascinating process is more than mere ecological adaptation; it's the search for a 'perceptual niche'.

Understanding the Perceptual Niche

The term 'perceptual niche' refers to how a species perceives, interprets, and interacts with its environment based on its sensory systems and cognitive abilities. ...Read More

Microwave Auditory Effect: The Science Behind Sound Perception

In a world where technology often mirrors the stuff of science fiction, there's one field that truly stands out: psychotronics. As a combination of psychology and electronics, psychotronics is a frontier that has captivated scientists, researchers, and curious minds alike for decades. However, its core technology—the microwave auditory effect, or the so-called "Frey effect"—may just be its most enigmatic piece. This technology, first discovered in 1961, provides a fascinating case study of the collision of neuroscience and engineering.

A Tale of Discovery: Allan H. Frey and His Groundbreaking Research

The microwave auditory effect was named after the American neuroscientist Allan H. Frey, who made the astonishing discovery while working at the General Electric's Advanced El ...Read More

Rolling the AI Dice: How Random Inputs Drive AI, And How It Mirrors Ancient Divination Techniques

It's not easy to imagine that the complex world of artificial intelligence (AI) can share anything in common with ancient techniques of divination such as augury, haruspicy, and anthropomancy. And yet, they do. The bridge between these vastly different practices is the concept of randomness.

The Language of Chance: How AI Uses Randomness

Artificial intelligence systems, especially Generative Adversarial Networks (GANs) and similar technologies, make extensive use of random inputs to function. These systems typically generate new outputs - like images, text, or other data types - by learning from large databases of information. Here's where the randomness comes in: to kickstart the generation process, these systems often need a random seed or starting point. This could be a ...Read More

Programming vs. Traditional Mathematics: The Reign of the Algorithm

In the modern era, where technology reigns supreme, a new kind of mathematical object has emerged as the most vital: the algorithm. It is a shift that is revolutionizing our understanding of mathematics and promises to shape our world in ways that Newton and Leibniz could only dream of. The question then arises: How do traditional mathematics and programming relate to this shift, and what could the future of mathematics look like?

Newton, Leibniz, and the Birth of Notation

Newton and Leibniz, the co-creators of calculus, brought about a radical transformation in mathematical thought. They introduced a new language of notation, creating an effective 'user interface' to mathematical thinking. This new system of notation became the springboard for the leaps and bounds o ...Read More

The Journey of Thought: From Aristotle to Chaos Theory - Is History Ready for its Own Revolution?

From the ancient Greek halls of wisdom to the digital world of quantum mechanics, the face of science has witnessed repeated intellectual revolutions. In this realm, an impressive parade of visionaries, including Aristotle, Newton, and Einstein, has stepped forward, shattering preconceived notions and unmasking new truths about our universe. Yet, paradoxically, the academic study of history seems to have ambled along a path relatively free from similarly radical upheavals. Why has this been the case, and is it about to change?

Disruptive Transitions in Science

The intellectual evolution of science has undergone a series of dramatic shifts, marked by distinct "revolutionary" periods. These disruptive transitions redefine our understanding of the universe and catalyze ...Read More

Technological Arms Race: Phase Transitions and Technological Capabilities

Throughout history, major conflicts and wars have often been attributed to political tensions and ideological differences. However, a compelling perspective emerges when we delve into the intertwined relationship between warfare and technological advancements. Seen from this angle, the onset of several major wars can be understood as a response to transformative technological capabilities. From analog computers to artificial intelligence and deep fake technology, the evolution of warfare has been profoundly influenced by these paradigm shifts.

World War I: The Dawn of Analog Computers

The outbreak of World War I marked a turning point in human history, coinciding with the rise of analog computers. These mechanical devices, capable of performing complex ...Read More

Consciousness: The Informational Immune System of the Human Mind

Consciousness has long been one of humanity's greatest enigmas. The subject of countless philosophical debates and scientific inquiries, consciousness is a complex interplay of perception, memory, emotion, and thought. However, a new understanding of consciousness has begun to take shape, suggesting that it functions similarly to an informational immune system. In essence, our consciousness is proposed to filter reality into a simplified version, a Goldilocks Principle of sorts, to protect the stability of our ego's control system, our dynamical system software.

The Goldilocks Principle in Psychology

The Goldilocks principle, originating from the children's story where Goldilocks prefers her porridge 'not too hot, not too cold, but just right,' has found relevance in ...Read More

Decoding Mathematics: From Hilbert's Dream to Turing's Machine

Mathematics, often perceived as the definitive realm of absolute truths, has been facing an existential crisis for more than a century. The objective clarity that once defined mathematics is now being upended by the challenges of distinguishing right from wrong within its abstract sphere. This is a subject that has captivated mathematicians since the early 20th century, leading to groundbreaking research and even birthing a new realm of mathematics.

A notable contribution to this puzzle was made by the duo of Bertrand Russell and Alfred North Whitehead, whose monumental work, "Principia Mathematica," aimed to simplify mathematical concepts by breaking them down into smaller, logical pieces. Their ambitious endeavor, while falling short of completely demystifying mathematics ...Read More

The AI John Henry: What We Get Wrong About Artificial Intelligence

In the folklore of America, the character of John Henry stands tall, hammer in hand, the embodiment of human grit and determination. Henry, a steel-driving man, competed against a steam-powered machine in a contest of strength and endurance. Despite his victory, Henry's story ended in tragedy, his life given in the name of human prowess. Today, we find ourselves in a similar race, not with steel-driving machines but with artificial intelligence (AI). However, we may be miscalculating our comparisons - and understanding this miscalibration is critical.

The Fault in Our Comparisons

Let's take chess and Go as examples - two ancient board games that have become symbolic battlefields for humans and AI. Deep Blue defeated chess grandmaster Garry Kasparov in 1997, while Alp ...Read More

Farm to Table, Sun to Circuit: Contrasting Human and AI Energy Needs

When we discuss the future of artificial intelligence (AI), it's often painted in the hues of science fiction. The conversation usually veers towards a futuristic scenario where AI, having surpassed human intelligence, attempts to seize control. However, the real threat is perhaps not AI's potential to do so much, but our need for so much complexity to achieve the same results. To fully understand this, let's dive into a comparison between the resources required by AI and humans, respectively.

The Marvel of the Turkey Sandwich

Consider a simple task: eating a turkey sandwich. It may seem trivial to you, but the amount of resources and processes it takes to make that sandwich possible is almost inconceivable.

First, there's the turkey. A single turkey sandwich requ ...Read More

Unseen Puppeteers: The Hidden Influence of Parasites on Brain Evolution and Behavior

For centuries, humans have wondered about the extent to which we control our own behavior. While the tug of war between free will and determinism has long dominated the discourse, there may be other, unseen players at the table. Enter parasites – the unsuspected puppeteers that might be pulling the strings of our behavior, and potentially even shaping the evolution of our brains.

Master Manipulators in the Animal Kingdom

Parasites, remarkably evolved to leech off their hosts, are known for their dexterous manipulation of their host's behavior. From the Toxoplasma gondii altering a rat's perception of cats to the 'zombie fungus' Ophiocordyceps unilateralis directing ants to become easy prey, parasites have proven their prowess in behavioral control.

...Read More

Reservoir Computing: Navigating Chaos in the Realm of Artificial Intelligence

It has been famously said that a butterfly flapping its wings in Brazil can set off a tornado in Texas. This sentiment illustrates the concept of chaos theory, a mathematical principle that describes how small changes in initial conditions can lead to wildly different outcomes. Chaotic systems, while seemingly random and unpredictable, hold a fascinating beauty of their own. They can be found all around us, in the weather patterns, stock markets, human heartbeats, and even the complex networks of neurons in our brains.
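
The sensitivity to initial conditions described above can be seen in a few lines of code. The sketch below uses the logistic map, a standard textbook example of chaos that is not taken from this article; the function name `logistic_orbit`, the parameter `r = 4.0`, and the starting values are illustrative assumptions.

```python
def logistic_orbit(x0, r=4.0, steps=50):
    """Iterate x -> r * x * (1 - x) and return every value visited.

    The logistic map is an assumed stand-in for a chaotic system;
    r = 4.0 puts it in its chaotic regime.
    """
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Two starting points that differ by one part in ten billion.
a = logistic_orbit(0.2)
b = logistic_orbit(0.2 + 1e-10)

# Track how far apart the two orbits drift at each step.
gaps = [abs(p - q) for p, q in zip(a, b)]
print(f"gap at step 0: {gaps[0]:.1e}")
print(f"largest gap within 50 steps: {max(gaps):.3f}")
```

The two orbits begin almost indistinguishable, yet within a few dozen iterations the gap between them grows to the same order as the values themselves, which is the butterfly effect in miniature.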

The Beauty of Chaos

Chaos theory has sparked a revolution in scientific thinking, compelling us to consider how interconnected and interdependent systems can exhibit both order and disorder simultaneously. The patterns that emerge from chaos aren't just ra ...Read More

Beyond Turing: Navigating the Universe of Hypercomputation

When we consider computers, we usually envisage machines crunching through heaps of data, solving complex equations, or rendering the graphics for our favorite video games. These systems, as advanced as they might seem, function under a traditional model of computation—Turing machines, named after their progenitor, Alan Turing. However, today's exploration transports us to a realm beyond this conventional model, into the universe of Hypercomputation.

Understanding Computation

First, let's lay some groundwork. Traditional computers, at their core, are modeled after Turing machines. In essence, these are idealized computational devices that manipulate symbols on a strip of tape according to a finite set of rules. The tape is infinite, but the amount of computati ...Read More

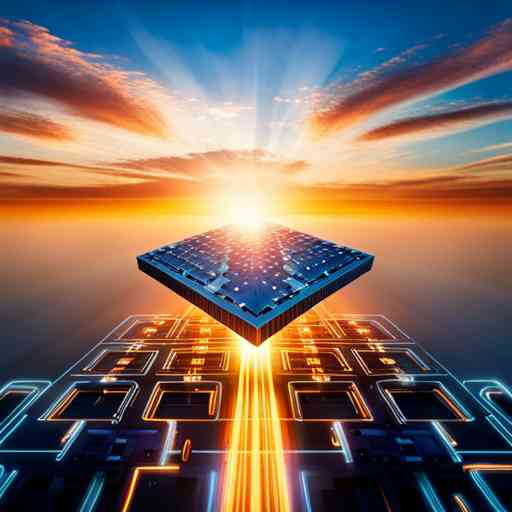

Moore's Law: A Stepping Stone to Tomorrow's Technology and Beyond

The breathtaking pace of technological progress can sometimes be hard to grasp. Few things illustrate this quite as effectively as Moore's Law. This empirical observation is named after Gordon Moore, co-founder of Fairchild Semiconductor and Intel, who noted in 1965 that the number of transistors on an integrated circuit was doubling roughly every year and, in 1975, revised the pace to approximately every two years. Moore's Law became a self-fulfilling prophecy, shaping the trajectory of technological development for over half a century.
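
To get a feel for what a doubling every two years implies, here is a rough back-of-the-envelope sketch. The function name `projected_transistors` is hypothetical, and the starting point of about 2,300 transistors in 1971 is an illustrative assumption rather than a figure from this article; the result is an idealized projection, not a measured count.

```python
def projected_transistors(start_count, start_year, year, doubling_years=2.0):
    """Idealized Moore's Law projection: the count doubles every `doubling_years`."""
    doublings = (year - start_year) / doubling_years
    return start_count * 2 ** doublings


# Assumed starting point for illustration: roughly 2,300 transistors in 1971.
start_count, start_year = 2300, 1971

for year in (1981, 1991, 2001, 2011, 2021):
    count = projected_transistors(start_count, start_year, year)
    print(f"{year}: about {count:,.0f} transistors")
```

Fifty years at this pace means 25 doublings, a factor of more than 33 million, which is why the curve feels so counterintuitive.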

The Marvel of Exponential Growth

Exponential growth is a concept that, despite its ubiquity in our lives, remains astonishingly counterintuitive. Picture a water lily growing on a pond, doubling its size each day. On the first day, it barely covers any of the pond's surface. For many days, the c ...Read More

Duality in the Cosmos: Exploring the Intersection of Technology and Perception

Arthur C. Clarke’s insightful contemplation, “Two possibilities exist: either we are alone in the Universe or we are not. Both are equally terrifying,” sparks profound intrigue when applied to our own technological achievements. With this new lens, we examine two iconic feats of human endeavor: the Moon landing in 1969 and the Soviet Venera missions to Venus in the 1970s. The possibility that these missions might be true is as astonishing as the chance they could be fabricated, yet both scenarios offer their own unique forms of terror.

The Challenging Reality of Evidence and Authority in Science

It's essential to underscore the inherent limitations when analyzing evidence for such momentous events. The Moon landings and the Venera missions were both significant feats of ...Read More

Schismogenesis Meets Cybernetics: The Dance of Interaction and Feedback in Digital Systems

In the vast landscape of social science and digital technologies, two fascinating concepts intertwine: schismogenesis, a term coined by anthropologist Gregory Bateson to describe how relationships can escalate and break apart, and cybernetics, the study of regulatory systems. Although originating from separate disciplines, they intersect in the modern world, offering a fresh perspective on how we understand and interact with our digital environment.

Schismogenesis: An Anthropological Perspective

The concept of schismogenesis was born in the realm of anthropology, defining a process where interactions between individuals or groups escalate tension and conflict, leading eventually to relationship dissolution or 'schism.' Schismogenesis comes in two flavors: symmetri ...Read More

Exploring the Past, Predicting the Future: Vannevar Bush's Vision in 'As We May Think'

In 1945, as the dust of World War II started to settle, a fascinating article was published in The Atlantic Monthly by a man named Vannevar Bush. His piece was entitled "As We May Think". An electrical engineer and science administrator, Bush was at the helm of the US Office of Scientific Research and Development during the war, overseeing crucial advancements such as the development of the atomic bomb. In this ground-breaking essay, Bush speculated on the potential of science and technology and charted a future that has remarkably turned into our present.

"As We May Think" is an extraordinary work because it predicts, with surprising accuracy, many technological advancements that define our contemporary world, including the internet, hypertext, search engines, speech recog ...Read More

Wireworld: A Universe of Virtual Physics

Picture this: a world made of wires. A microcosm that operates under a set of rules entirely different from our own, yet capable of simulating complex systems akin to circuits and digital logic. This world is not a work of science fiction, but a simulation existing within our computers, known as Wireworld. This peculiar universe is a cellular automaton, a mathematical model used to depict and explore a host of systems, from biological life cycles to physical processes. It's a new 'reality', a novel physics born in the virtual realm.
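
As a minimal sketch of how such a universe can be simulated, the code below applies the commonly cited Wireworld rules, which this summary does not spell out: an electron head becomes a tail, a tail becomes plain conductor, and a conductor becomes a head when exactly one or two of its eight neighbours are heads. The names `EMPTY`, `HEAD`, `TAIL`, `WIRE`, and `step` are illustrative choices, not part of any particular implementation.

```python
EMPTY, HEAD, TAIL, WIRE = 0, 1, 2, 3


def step(grid):
    """Return the next generation of a Wireworld grid (a list of equal-length rows)."""
    rows, cols = len(grid), len(grid[0])

    def head_neighbours(r, c):
        # Count electron heads among the up-to-eight surrounding cells.
        count = 0
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                rr, cc = r + dr, c + dc
                if (dr or dc) and 0 <= rr < rows and 0 <= cc < cols:
                    count += grid[rr][cc] == HEAD
        return count

    new_grid = []
    for r in range(rows):
        new_row = []
        for c in range(cols):
            cell = grid[r][c]
            if cell == HEAD:
                new_row.append(TAIL)    # head -> tail
            elif cell == TAIL:
                new_row.append(WIRE)    # tail -> conductor
            elif cell == WIRE and head_neighbours(r, c) in (1, 2):
                new_row.append(HEAD)    # conductor picks up the electron
            else:
                new_row.append(cell)    # empty cells and idle wire are unchanged
        new_grid.append(new_row)
    return new_grid


# A single electron (tail followed by head) travelling right along a wire.
wire = [[TAIL, HEAD, WIRE, WIRE, WIRE]]
for _ in range(3):
    print(wire[0])
    wire = step(wire)
```

Each call to `step` moves the electron one cell along the wire; building diodes and logic gates is a matter of arranging conductor cells so that these same local rules route or block electrons.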

The Core Concept: Cellular Automata

The idea behind Wireworld, and other cellular automata like the famous Game of Life, is quite straightforward. Imagine a grid, a gigantic chessboard where each square - or cell - can change its state dependi ...Read More

Unveiling Illusion: 'World on a Wire' and the Dawn of Simulated Reality

Decades before we became familiar with the concept of living in a simulation, the concept was vividly portrayed in the intriguing 1973 German science fiction film "World on a Wire" (original title: "Welt am Draht").

The Plot:

The story unfolds in a high-tech company where a supercomputer hosts a city populated with 9,000 "identity units" - simulated beings who live, work, and are unaware of their virtual existence. When the director of the program mysteriously dies, his successor, Dr. Fred Stiller, begins to unravel the existential nightmare that they have created. As the reality around him becomes increasingly uncertain, Stiller must grapple with the fear that he might also be a simulated being within a higher-level simulation.

A Visionary Concept:

For it ...Read More

When Space Calculates: A Journey into Digital Physics

In our continuous search for understanding the universe, we've come up with many different models and theories, ranging from the intricate dances of celestial bodies in the heavens to the infinitesimally small subatomic particles. However, some scientists argue that there might be a radically different approach to comprehend the cosmos: Digital Physics.

Digital Physics is a collection of theories that propose that the universe, at its most fundamental level, operates like a giant computer. It's a vision where the universe's physical laws and constants, from gravity to the speed of light, are no different than algorithms being run by the cosmic processor.

One of the pioneers of this bold and intriguing idea is Konrad Zuse, a German computer scientist and inventor, famous f ...Read More

The Invisible Civilizations: Discovering Our Technological Co-Inhabitants

As humans, we pride ourselves on the power of our knowledge. We assert that we are rational creatures, constantly seeking out the intricacies of the universe, and delighting in the mastery of technology, information, and facts. But, in a recent mind-bending revelation from an unlikely source, our perception of the world and our place within it has been challenged, and surprisingly, it has nothing to do with the shape of our planet.

The Flat Earth community, often mocked for their views on Earth's geometry, has stumbled upon a revelation that has the potential to reshape our understanding of human civilization. Strangely enough, this has nothing to do with their traditional theories about the shape of Earth. Instead, they've discovered the existence of multiple, invisible technolog ...Read More

The Ultimate Time Capsule: Sending AI Ambassadors into the Cosmos

In an extraordinary fusion of artificial intelligence and space exploration, we are daring to propose a bold plan to send a representative to the stars. But this envoy won't be a human astronaut or even a living organism. Instead, it will be a piece of advanced AI technology known as a Large Language Model (LLM) nestled within a self-replicating spacecraft, a concept referred to as a von Neumann probe.

LLMs like GPT-4 have demonstrated a remarkable ability to understand and generate human-like text. They can converse, write essays, create poetry, and even develop computer code. These models learn from a vast dataset of human language and can thus reflect our knowledge, culture, and perspectives.

A von Neumann probe, named after the brilliant mathematician John von Neumann, is a hypotheti ...Read More

Exploring the Bicameral Mind: A Forgotten Self?

Deep within the complex tapestry of the human mind, a theory has emerged that challenges our most basic understanding of self-consciousness. This theory, known as the "Bicameral Mind," suggests that the human brain was once divided into two distinct halves that communicated with each other in a way that might seem almost alien to us today. Developed by psychologist Julian Jaynes in his 1976 book "The Origin of Consciousness in the Breakdown of the Bicameral Mind," this provocative theory takes us on a fascinating journey into the labyrinth of our mental evolution.

The Two Chambers of the Mind

The term "bicameral" originates from Latin, where "bi" stands for "two" and "camera" for "chamber". The bicameral mind, according to Jaynes, was essentially a mind split into ...Read More

The Magic Symphony: Music as a Programming Language for the Human Mind

From time immemorial, music has been a pivotal element of human existence. From the ancient tribes dancing to the rhythmic tunes of nature to the modern-day melody enthusiast tapping their feet to a well-orchestrated symphony, the power of music has always been undeniable. Songs have the uncanny ability to get lodged in our heads, making us move and altering our emotions. What is it that makes music so powerful and influential? To answer this, let's think of music as a programming language - not for computers, but for the human mind.

Decoding the Melody: Understanding the Language of Music

Before we delve into the concept of music as a mind's programming language, we need to understand the structure of music itself. At its core, music consists of two key elements: rhythm ...Read More

Particle Accelerators: The Next Frontier in Chip-Sized Technology

Particle accelerators are instrumental in deepening our understanding of the fundamental physics that governs the universe. However, these enormous machines - like CERN's Large Hadron Collider (LHC), which extends over a 17-mile circumference - are expensive, resource-intensive, and impractical for many applications. A revolution is upon us, promising to change the game. This article will discuss the development of particle accelerators on a chip, an advancement that could dramatically reduce the size, cost, and resource requirements of these important tools.

Accelerators and Their Role in Science

Particle accelerators are a kind of scientific equipment that use electromagnetic fields to propel charged particles to high speeds and contain them in well-defined beams ...Read More

Is Language a Cosmic Symbiote? The Interstellar Hypothesis of Linguistic Evolution

Language: It connects us, empowers us, and forms the very fabric of human cognition. But have we ever stopped to think about what language actually is? Could it be that our greatest tool for communication and understanding is, in fact, a non-physical symbiote or even a parasite? And is it possible that this intangible force wasn't born on Earth, but brought to our planet on beams of starlight through a form of panspermia? This might sound like science fiction, but some researchers are beginning to entertain these radical ideas.

The Language Parasite: Harnessing our Minds for its Own Propagation

Language has often been likened to a virus. Just as a virus uses a host's cellular machinery to replicate itself, language uses our brains to reproduce and spread. It 'infects' ou ...Read More

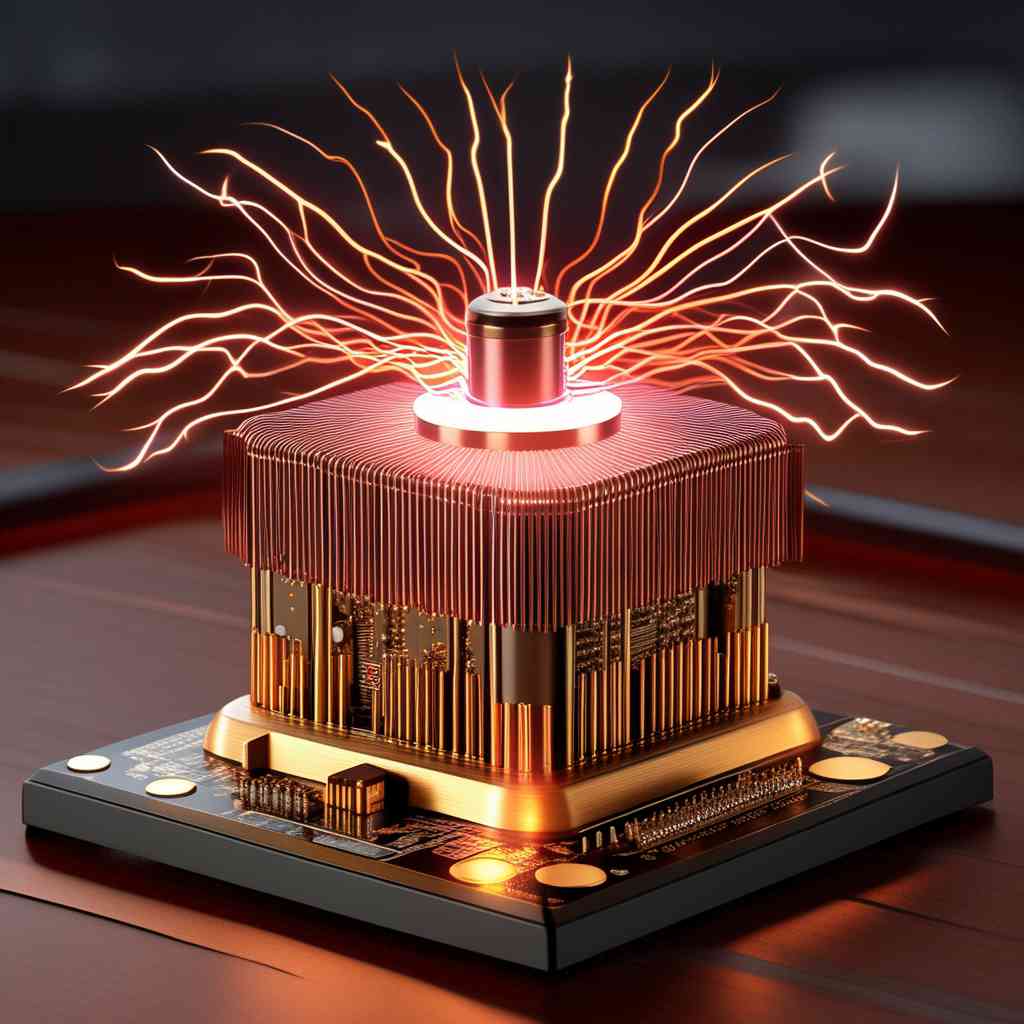

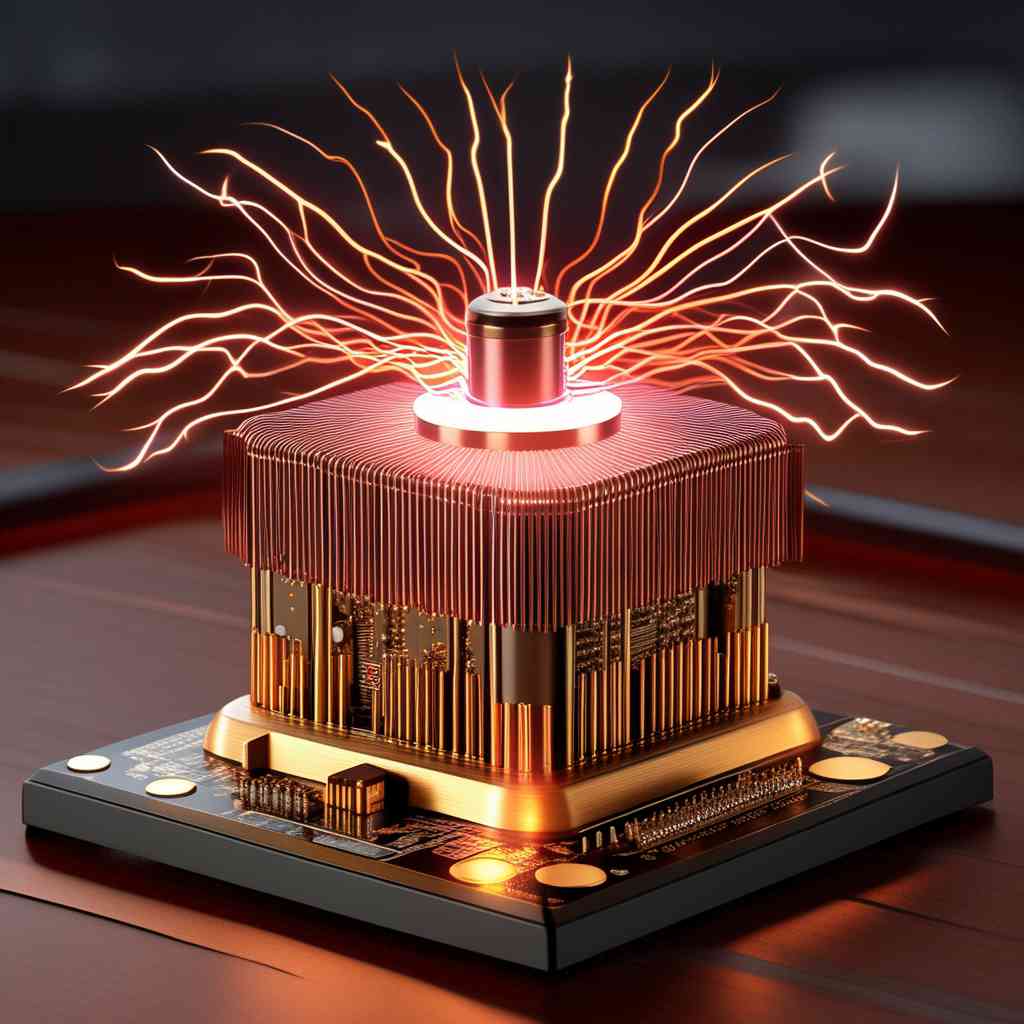

Bits with Bite: When Power Meets Computation

Typically, when we consider computation, we focus on the number crunching, the logic gates, and the nanosecond operations of our devices. But what happens when we blur the boundaries and consider the energy associated with our computations? From a Wi-Fi router to a gargantuan power plant, all involve a degree of computation, but the energy they consume varies wildly. With increasing interest in low-power, green devices, it's intriguing to consider the flip side of the equation. This brings us to the revolutionary concept of "bits with bite," where computer chips are measured not just in volts, but in megavolts and gigavolts. This is the frontier where computation meets power on an unprecedented scale.

The Energy-Computation Divide

The divide between energy and computa ...Read More

Echoes of the Past: Tracing Computer Lineage Back to Musical Automata

Long before the advent of our modern computers, the world witnessed a technological marvel that would foreshadow our journey towards digital automation. This predecessor didn't crunch numbers or process data, but it produced symphonies of sound that enchanted listeners. Let's journey back in time and trace the fascinating story of early pipe organs with player piano-style paper rolls and how they influenced the creation of the computer.

The Enchanting Mechanics of the Pipe Organ

The pipe organ, often described as the 'King of Instruments', has its roots buried deep in ancient history. While the earliest organs were hand-pumped, technology took a giant leap forward with the introduction of mechanical action organs in the 15th century, which used intricate clockw ...Read More

When Large Language Models Meet DNA: Towards a New Era of Information Storage

In the past few years, we have witnessed breathtaking advancements in artificial intelligence and machine learning models. Especially noteworthy is the evolution of Large Language Models (LLMs) like GPT-4. These are sophisticated algorithms that can understand, generate, and interact in human-like language, performing a wide variety of tasks from translation to generating high-quality content.

One of the most exciting recent developments in this field is the significant decrease in the size of these models. Some compact LLMs now require only about 1 gigabyte (GB) of memory. To put this in perspective, that isn't much more than the size of the human genome, which takes up just under 0.8 GB. Yes, you read that right. The intricate algorithm, mimicking human language processi ...Read More

Ghost in the Machine: AI and the Occult

In the late 1990s, a small group of researchers at the University of Warwick in England started a philosophical journey that would blend cutting-edge tech, speculative fiction, and elements of the occult into a unique intellectual brew. Known as the Cybernetic Culture Research Unit (CCRU), this collective embarked on a path that strayed far from conventional academic pursuits.

The CCRU and Their Unorthodox Approach

The CCRU, led by philosophers Nick Land and Sadie Plant, had a distinct approach to their work. They saw no reason to separate 'rational' scientific thought from 'irrational' areas like occult practices and esoteric numerology. Instead, they focused on the way these two seemingly disparate areas intersect and influence one another.

One particula ...Read More

The Hidden Complexity: Understanding Breakaway Civilizations

Imagine a civilization that has advanced technologically and culturally to such an extent that it's almost unrecognizable to the majority of us. Picture a society that has leapfrogged not just years, but centuries into the future, essentially 'breaking away' from the rest of humanity. This is the concept of a 'breakaway civilization', a term that might seem like it's been pulled from the pages of a science fiction novel. But could such a civilization exist, concealed within the folds of our contemporary world? And if so, how would we even begin to recognize it?

Unseen Breakthroughs: The Technological Divide

To understand the concept of a breakaway civilization, we must first grapple with the very pace of technological innovation. This acceleration is so fast that ...Read More

Are We Erasing Our Own Footprint? An Insight into the Silurian Hypothesis

The Silurian Hypothesis: A Curious Proposition

The Silurian Hypothesis, named as a nod to a race of ancient, advanced reptiles in the British science fiction television series "Doctor Who," is a captivating proposition. This hypothesis, suggested by scientists Gavin Schmidt and Adam Frank, questions whether there might have been technologically advanced civilizations on Earth before us. More precisely, it ponders: if such ancient civilizations existed, would we be able to detect their traces today?

This hypothesis was not intended to confirm the existence of past advanced civilizations on Earth but rather to challenge our assumptions about the durability of the human footprint on our planet. It makes us contemplate, could any traces of an advanced civilization be eradica ...Read More

Ladder of Consciousness: Leibniz's Representation Hierarchy

In the quest to understand the essence of consciousness, few thinkers have offered more nuanced perspectives than German polymath Gottfried Wilhelm Leibniz. Whereas René Descartes famously distilled existence into his aphorism "I think, therefore I am," Leibniz responded with a different kind of cogito: "I represent that I represent, therefore I represent."

This Leibnizian definition of consciousness implies a hierarchy of representation, allowing for an empirical dissection of consciousness from the seemingly inanimate to the sophistication of human awareness.

The Inanimate and the Sensation

Leibniz posits that objects incapable of representing stimuli, like a stone, exist at the bottom of this hierarchy. They undergo events but express no discernible response. Converse ...Read More

Disrupting Time: How the Antikythera Mechanism Rewrites Technological History

Unlocking a Time Capsule

Deep under the sea, the Antikythera mechanism was discovered in 1901 among the remnants of an ancient Greek shipwreck. Named after the Antikythera island, located between Crete and the mainland of Greece, this object is an artifact from another era, a glimpse into a world far removed from ours. Its origin dates to around 70–60 BC, but its complexity and precision would be at home in the industrial revolution almost two millennia later.

The Antikythera mechanism has challenged our understanding of ancient technological capabilities and is believed to be the world's first known analog computer. This intricate artifact, with its finely meshed gears and delicate mechanisms, has been studied extensively, revealing layers of innovation and craft ...Read More

Understanding the Consciousness of Complex Systems: A Journey from Digital to Analog Approximation

Complexity in Computational Systems

As our world grows increasingly connected and digitized, our computational systems are reaching unprecedented levels of complexity. Systems that were once relatively simple to understand, such as a desktop computer or a smartphone, have evolved into large-scale networks involving billions of processing units, memory elements, and interconnections. Much like a complex ecosystem, these systems now demonstrate behavior and adaptation mechanisms that bear a striking resemblance to the patterns found in natural systems - from the inner workings of a single cell, to the sprawling branches of a tree, to the social and physical dynamics of an entire city.

A New Paradigm: Analog Dynamical Systems

...Read More

Artificial Immune Systems: The Future of Cyber Defense

The immune system is a fascinating piece of biology. Its main task is to protect our bodies from foreign invaders. It's complex, adaptable, and can learn from past experiences. For years, computer scientists have been trying to recreate this biological phenomenon in digital form, leading to what we now refer to as artificial immune systems (AIS). Much like their biological counterparts, AIS are designed to protect computer systems from threats by learning, adapting, and reacting to them.

Artificial Immune Systems – What Are They?

Artificial immune systems are a class of adaptive systems inspired by the principles and processes of the human immune system. These bio-inspired computing systems are not a replication of the biological immune functions but, instea ...Read More

From Silicon Chips to Quantum Optics: The Future Generations of AI

AI has made enormous strides since the conception of the first digital computer. It has permeated every facet of our lives, from email filters and voice assistants to medical diagnostics and self-driving cars. The substrates of AI - classical computing hardware and algorithms - have evolved over the years. However, we're on the cusp of an entirely new paradigm. Emerging technologies like quantum optics, memristor networks, and spintronic circuits are poised to revolutionize AI, offering enormous increases in speed, efficiency, and capabilities. Let's dive in.

Quantum Optics and AI

Quantum optics, a field that melds quantum mechanics and light, promises to revolutionize computing and, consequently, artificial intelligence. Quantum optical computing uses particles of li ...Read More

Unraveling the AI Revolution: The Resurgence of Neural Networks

Neural networks, the cornerstone of artificial intelligence (AI), are experiencing a renaissance. These clever systems, designed to imitate the human brain's inner workings, are now enhancing our lives in ways unimaginable just a few years ago. They help us navigate our cities, translate languages instantly, recommend movies to watch, diagnose diseases, and even drive our cars. But what exactly is driving this neural network renaissance? Let's dive in.

Neural networks were born in the 1940s when scientists proposed models mimicking the human brain to perform complex calculations. The technology of the era was insufficient to fulfill this grand vision, and neural networks fell into a long period of relative dormancy. However, with the advent of modern computers and big da ...Read More

Natural Computing: Harnessing Nature's Algorithms for Problem Solving

In the vast wilderness of computing paradigms, an intriguing one is currently blossoming, and it's going back to our roots: "Natural Computing," an emerging field that seeks to harness the power of nature to tackle complex computational problems. From understanding the intricacies of neural networks to using the principles of evolution for optimization, natural computing not only marks a radical shift in our thinking about computers but also carries the potential to revolutionize our problem-solving methodologies.

The concept of natural computing is built on a unique perspective: nature itself is a computer. Look around you. The ants tracing a food source are solving a navigation problem. Your immune system fighting off a virus is a real-time conflict resolution scenario. The format ...Read More

Crashing Reality: The Intersection of Perception, Information, and Threat in the Digital Age

In the realm of science fiction, few books have enjoyed the cult following of Neal Stephenson's 'Snow Crash.' The tale weaves a vibrant tapestry of language, culture, and cognition, underpinned by a curious concept – the deadly 'Snow Crash' virus, capable of crashing both computers and human minds. It poses an intriguing question: Could information, in certain forms, pose a threat to our wellbeing or even our lives?

To explore this idea, we'll need to dip our toes into the science behind our perception of reality and delve into the realm of information hazards, also known as 'infohazards.' Through this journey, we'll learn how the fabric of human perception intertwines with the potential threats and safeguards associated with consuming information.

Perception: The Con ...Read More

Artificial Gaia Intelligence: Building a Global Brain

We've come a long way since the invention of the first computer. As we continue to advance in the field of artificial intelligence (AI), the concept of an artificial general intelligence (AGI) is starting to take shape. AGI, often imagined as an entity capable of understanding, learning, and applying knowledge at a level indistinguishable from human intellect, represents the next level of AI advancement. But there's another way to view the development of AGI — one that takes inspiration from the interlinked ecosystems of our very own planet. Let's explore the idea of AGI as an Artificial Gaia Intelligence (AGaI).

The concept of Gaia, or the Gaia hypothesis, proposes that all the Earth's biological, chemical, and physical components interact and combine to create a complex, self-r ...Read More

Beyond Human Perception: How LLM AI Models Are Unveiling Hidden Truths

In the world of artificial intelligence, breakthroughs seem to occur at an unprecedented pace. The most recent leap in AI technology comes from the emergence of Large Language Models (LLMs), the most advanced of which are currently pushing the boundaries of our understanding of the world. These high-powered, versatile models are now equipped to reveal to humanity truths we have been unable to perceive up until now.

AI's ability to process vast quantities of information is already well established. The models can swiftly and accurately sift through data that would take humans centuries to fully grasp. However, the new generation of LLMs, such as OpenAI's GPT-4, have been trained on even more diverse datasets, enabling them to make startlingly accurate predictions and deliver comple ...Read More

Tower of Babel: LLM Technology and the Reconciliation of Tongues

It’s a story as old as civilization itself, imprinted in the annals of our collective psyche: the Tower of Babel. In this biblical narrative, a unified humanity once spoke a common language, fostering unparalleled cooperation and innovation. As humanity sought to challenge the divine, constructing a tower reaching for the heavens, God, in response to their hubris, confounded their language, thereby dispersing them across the globe. From this tale was born our world's linguistic diversity, creating both a fascinating richness and a barrier to global understanding. Today, we stand on the brink of a new era, one that could metaphorically undo this ancient division through the magic of technology: Large Language Models, or LLMs.

LLMs are artificial intelligence models trained on vast ...Read More
